Dynamic Audio Classification with Neural Networks

Live dynamic audio signal classification and deployment with neural networks

This project developed a comprehensive system for live dynamic audio signal classification using neural networks and Mel-Frequency Cepstral Coefficients (MFCC). The system enables real-time audio classification for security applications through automated machine learning deployment on embedded hardware.

The project utilized the Edge Impulse framework to create a modular, scalable solution for audio classification that can be deployed on personal devices for continuous monitoring and analysis. By combining MFCC feature extraction with neural network classification, the system achieved 81.4% accuracy on the UrbanSound8K dataset for security-relevant audio classes.

Table of Contents

- Project Overview

- Technical Approach

- Methodology

- Implementation Details

- Results and Analysis

- Deployment and Testing

- Learning Outcomes

- Project Impact

Project Overview

Primary Objectives:

- Develop modular audio classification system: Create scalable neural network architecture for real-time audio processing

- Implement MFCC feature extraction: Utilize Mel-Frequency Cepstral Coefficients for optimal audio representation

- Deploy embedded solution: Raspberry Pi implementation for practical security applications

- Achieve high classification accuracy: Target 80%+ accuracy on UrbanSound8K dataset

Key Innovation: This project extended beyond typical academic exercises by implementing real-time audio classification with embedded deployment, providing practical insights into machine learning applications for security and monitoring systems.

Technical Stack:

- Python Libraries: Librosa (MFCC), Keras (Neural Networks)

- Framework: Edge Impulse for model development and deployment

- Hardware: Raspberry Pi with USB microphone

- Dataset: UrbanSound8K with four audio classes

Technical Approach

MFCC Feature Extraction

The project used Mel-Frequency Cepstral Coefficients (MFCC) for audio feature extraction. MFCCs summarize the short-term spectrum of a signal on the mel scale, a frequency scale that approximates human auditory perception, and yield a compact feature vector for each analysis frame.

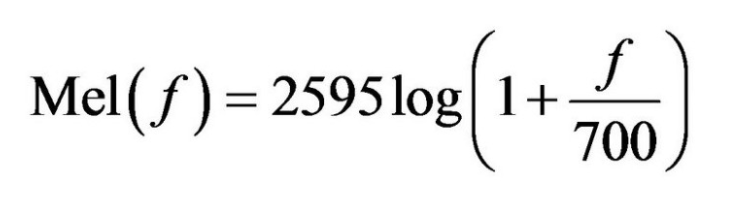

Mathematical Foundation: The MFCC process involves several key steps:

- Fast Fourier Transform (FFT): Converts time-domain signals to frequency domain

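- Mel-scale Filtering: Applies a bank of filters spaced on the mel scale, which approximates human pitch perception

- Logarithmic Compression: Compresses the dynamic range of the filter energies, approximating perceived loudness

- Discrete Cosine Transform: Decorrelates the log filter energies; keeping only the first few coefficients reduces dimensionality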
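The four steps above can be sketched in plain NumPy. This is a minimal illustration, not the project's Librosa or Edge Impulse pipeline: the frame length, hop, FFT size, filter count, and the Hamming window applied before the FFT are all assumed values chosen for the example.

```python
import numpy as np

def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    bank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):        # rising edge of the triangle
            bank[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):       # falling edge of the triangle
            bank[i - 1, k] = (right - k) / max(right - center, 1)
    return bank

def mfcc(signal, sample_rate=16000, frame_len=0.025, frame_step=0.010,
         n_fft=512, n_filters=32, n_coeffs=13):
    """Return an (n_frames, n_coeffs) matrix of MFCCs (assumed parameters)."""
    size = int(round(frame_len * sample_rate))
    step = int(round(frame_step * sample_rate))
    n_frames = 1 + (len(signal) - size) // step
    idx = np.arange(size)[None, :] + step * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(size)
    # Step 1: FFT of each frame, converted to a power spectrum
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # Step 2: mel-scale filtering
    energies = power @ mel_filterbank(n_filters, n_fft, sample_rate).T
    # Step 3: logarithmic compression (small offset avoids log(0))
    log_energies = np.log(energies + 1e-10)
    # Step 4: DCT-II, keeping the first n_coeffs coefficients
    m = np.arange(n_filters)
    n = np.arange(n_coeffs)[:, None]
    basis = np.cos(np.pi * n * (m + 0.5) / n_filters)
    return log_energies @ basis.T

# Usage: one second of a synthetic 440 Hz tone
t = np.arange(16000) / 16000.0
features = mfcc(np.sin(2 * np.pi * 440.0 * t))
```

Each row of `features` is one analysis frame and each column one cepstral coefficient, which is the matrix shape the classifier consumes.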

MFCC Equation:
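A common textbook form of the final DCT step is given here as a reference, not necessarily the exact formulation used in the project. The symbols are assumptions introduced for this sketch: $E_m$ is the energy in the $m$-th of $M$ mel filters, $N$ is the number of coefficients kept, and $f$ is frequency in Hz.

```latex
c_n = \sum_{m=1}^{M} \log\left(E_m\right)
      \cos\left[\frac{\pi n}{M}\left(m - \tfrac{1}{2}\right)\right],
\qquad n = 0, 1, \dots, N - 1

\mathrm{mel}(f) = 2595 \, \log_{10}\left(1 + \frac{f}{700}\right)
```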

Implementation Benefits:

- Dimensionality Reduction: Efficient representation of audio features

- Human Auditory Modeling: Optimized for natural sound classification

- Noise Robustness: Improved performance in varying acoustic environments

- Real-time Processing: Suitable for live audio classification

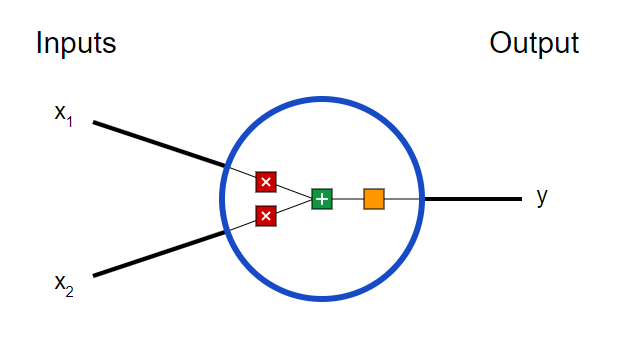

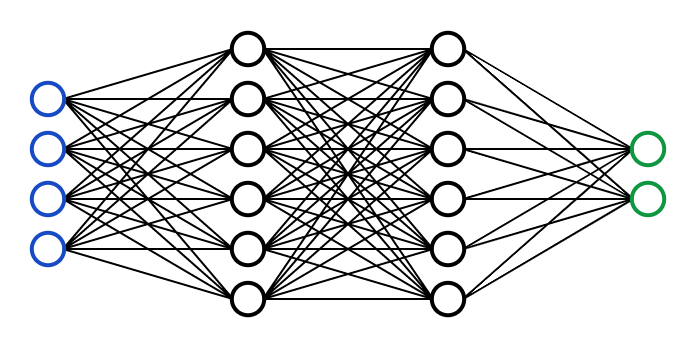

Neural Network Architecture

The neural network was implemented in Keras as a feed-forward classifier that maps MFCC features to class probabilities.

Network Structure:

- Input Layer: MFCC coefficient matrix (coefficients × time frames)

- Hidden Layers: Multiple dense layers with activation functions

- Output Layer: Softmax classification for four audio classes

- Training Cycles: 300 epochs for optimal convergence
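The forward pass of such a network can be sketched as follows. The example is written in NumPy rather than Keras so that it runs without a deep-learning install, and it covers inference only. The layer widths (32 and 16), the input shape (49 frames × 13 coefficients), and the random weights are hypothetical placeholders, since the text above does not give the actual layer sizes.

```python
import numpy as np

CLASSES = ["air_conditioner", "car_horn", "dog_bark", "gun_shot"]

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_params(layer_sizes, seed=0):
    """Random (weights, bias) pairs for each dense layer (He-style scaling)."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]

def forward(params, x):
    """Flatten each MFCC matrix, apply ReLU dense layers, finish with softmax."""
    h = x.reshape(x.shape[0], -1)
    for w, b in params[:-1]:
        h = relu(h @ w + b)
    w, b = params[-1]
    return softmax(h @ w + b)

# Hypothetical input shape: 49 frames x 13 coefficients per window
N_FRAMES, N_COEFFS = 49, 13
params = init_params([N_FRAMES * N_COEFFS, 32, 16, len(CLASSES)])
batch = np.random.default_rng(1).normal(size=(5, N_FRAMES, N_COEFFS))
probs = forward(params, batch)              # one row of class probabilities per window
```

Each row of `probs` sums to 1, so the largest entry can be compared directly against the confidence threshold.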

Key Parameters:

- Learning Rate: 0.00005 (small update steps for stable convergence over the 300 training cycles)

- Confidence Threshold: 0.70 (classification certainty)

- Window Size: 1000ms (audio analysis window)

- Window Increase: 100ms (sliding window increment)
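To make the windowing and threshold parameters concrete, the sketch below counts how many 1000 ms windows a clip yields when the window advances by 100 ms, and shows one way a 0.70 confidence threshold could be applied. The function names and the "uncertain" fallback label are assumptions for illustration.

```python
def window_starts(clip_ms, window_ms=1000, increase_ms=100):
    """Start times (ms) of every full window that fits inside the clip."""
    if clip_ms < window_ms:
        return []
    return list(range(0, clip_ms - window_ms + 1, increase_ms))

def apply_threshold(probabilities, threshold=0.70):
    """Return the top class, or 'uncertain' if it falls below the threshold."""
    label = max(probabilities, key=probabilities.get)
    return label if probabilities[label] >= threshold else "uncertain"

# UrbanSound8K excerpts are at most 4 seconds long
starts = window_starts(4000)
print(len(starts), starts[-1])
print(apply_threshold({"dog_bark": 0.82, "car_horn": 0.10,
                       "gun_shot": 0.05, "air_conditioner": 0.03}))
```

With these settings consecutive windows share 900 ms of audio, so a single 4-second clip contributes many overlapping training windows.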

Edge Impulse Framework

The project leveraged Edge Impulse for streamlined model development and deployment.

Framework Benefits:

- Graphical Interface: User-friendly model development

- Automated Feature Generation: MFCC processing pipeline

- Model Training: Integrated neural network training

- Hardware Deployment: Direct deployment to embedded systems

Workflow Integration:

- Data Upload: Automated dataset processing

- Feature Extraction: MFCC coefficient generation

- Model Training: Neural network optimization

- Deployment: Hardware-ready model export

Methodology

Data Collection and Preparation

Dataset Selection:

- UrbanSound8K: Comprehensive urban audio dataset with 8,732 labeled sound excerpts from 10 classes

- Security Classes: car_horn, dog_bark, gun_shot

- Control Class: air_conditioner (ambient noise)

- Data Split: 75% training, 25% testing
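A 75/25 split can be sketched as below. The text does not say whether the split was stratified or whether Edge Impulse performed it automatically, so the per-class stratification, the seed, and the file names are assumptions made for this example.

```python
import random
from collections import defaultdict

def stratified_split(samples, test_fraction=0.25, seed=42):
    """Split (path, label) pairs so each class keeps the same test fraction."""
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_label):
        paths = sorted(by_label[label])      # sort first so the shuffle is reproducible
        rng.shuffle(paths)
        n_test = round(len(paths) * test_fraction)
        test += [(p, label) for p in paths[:n_test]]
        train += [(p, label) for p in paths[n_test:]]
    return train, test

# Hypothetical file names, eight clips per class
labels = ["air_conditioner", "car_horn", "dog_bark", "gun_shot"]
samples = [(f"{label}_{i}.wav", label) for label in labels for i in range(8)]
train, test = stratified_split(samples)
```

One caveat worth keeping in mind: UrbanSound8K ships with ten predefined folds, and slices cut from the same recording can land on both sides of a random split, which may inflate test accuracy.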

Automated Data Upload System: A Python script automated dataset processing and upload to Edge Impulse:

- Librosa Integration: Audio file processing and MFCC feature extraction

- Edge Impulse API: Automated upload to cloud-based development platform

- Metadata Processing: CSV-based label assignment and organization

- Quality Control: Audio format validation and preprocessing

- Batch Processing: Efficient handling of large audio datasets
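The metadata step might look like the following sketch, which reads a UrbanSound8K-style metadata CSV and keeps only the four classes used here. The column names match the dataset's metadata file as I understand it; the rows are made-up examples and the function name is hypothetical.

```python
import csv
import io
from collections import Counter

TARGET_CLASSES = {"air_conditioner", "car_horn", "dog_bark", "gun_shot"}

def select_clips(metadata_file, wanted=TARGET_CLASSES):
    """Return (relative_path, label) pairs for rows whose class is wanted."""
    reader = csv.DictReader(metadata_file)
    return [
        (f"fold{row['fold']}/{row['slice_file_name']}", row["class"])
        for row in reader
        if row["class"] in wanted
    ]

# Illustrative rows only, not copied from the real metadata file
SAMPLE_CSV = """slice_file_name,fsID,start,end,salience,fold,classID,class
11111-3-0-0.wav,11111,0.0,4.0,1,5,3,dog_bark
22222-1-0-0.wav,22222,0.5,2.1,2,1,1,car_horn
33333-8-0-0.wav,33333,0.0,4.0,1,2,8,siren
44444-6-0-0.wav,44444,1.0,1.9,1,7,6,gun_shot
55555-0-0-0.wav,55555,0.0,4.0,2,3,0,air_conditioner
"""

clips = select_clips(io.StringIO(SAMPLE_CSV))
counts = Counter(label for _, label in clips)
```

In a real run the `io.StringIO` object would be replaced by an open handle on the dataset's metadata file.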

Key Script Features:

- WAV File Processing: 16kHz mono signal conversion for optimal processing

- CBOR Encoding: Efficient data serialization for API transmission

- HMAC Authentication: Secure API communication with cryptographic signatures

- Error Handling: Robust failure detection and reporting system
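The signing step can be illustrated with the standard library alone. This is a sketch under several assumptions: the message layout follows my reading of Edge Impulse's data acquisition format and should be checked against the current documentation, JSON stands in for CBOR so no third-party package is needed, and the key, device name, and device type are placeholders. No network request is made.

```python
import hashlib
import hmac
import json
import time

EMPTY_SIGNATURE = "0" * 64   # placeholder that is present while the message is signed

def _digest(message, hmac_key):
    encoded = json.dumps(message).encode("utf-8")
    return hmac.new(hmac_key.encode("utf-8"), encoded, hashlib.sha256).hexdigest()

def build_signed_sample(values, interval_ms, hmac_key,
                        device_name="uploader-script", iat=None):
    """Wrap raw samples in a signed message (assumed layout, JSON not CBOR)."""
    message = {
        "protected": {"ver": "v1", "alg": "HS256",
                      "iat": int(time.time()) if iat is None else iat},
        "signature": EMPTY_SIGNATURE,
        "payload": {
            "device_name": device_name,          # hypothetical device name
            "device_type": "PYTHON_UPLOADER",    # hypothetical device type
            "interval_ms": interval_ms,
            "sensors": [{"name": "audio", "units": "wav"}],
            "values": values,
        },
    }
    # Sign the message with the zeroed signature, then fill the real one in
    message["signature"] = _digest(message, hmac_key)
    return json.dumps(message)

def verify_signed_sample(encoded, hmac_key):
    """Recompute the HMAC over the zeroed-signature form and compare."""
    message = json.loads(encoded)
    claimed = message["signature"]
    message["signature"] = EMPTY_SIGNATURE
    return hmac.compare_digest(claimed, _digest(message, hmac_key))

# 16 kHz audio means one sample every 0.0625 ms
encoded = build_signed_sample([0, 12, -7, 3], 0.0625, "hypothetical-key",
                              iat=1700000000)
```

Signing over a zeroed signature field lets the receiver verify the message without the signature having to cover itself.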

Audio Processing Pipeline:

- File Upload: Automated script for Edge Impulse integration

- Metadata Processing: Label assignment and organization

- Quality Control: Audio file validation and preprocessing

- Feature Extraction: MFCC coefficient generation

Model Development Process

Step 1: Data Upload and Organization

- Automated Python script for Edge Impulse integration

- Metadata file processing for proper labeling

- Training/testing split configuration (25% testing)

Step 2: Feature Engineering

- Time series data block configuration

- Window size optimization (1000ms)

- Window increase parameter (100ms)

- MFCC processing block implementation

Step 3: Neural Network Training

- 300 training cycles for optimal convergence

- Learning rate optimization (0.00005)

- Confidence threshold setting (0.70)

- Overfitting prevention strategies

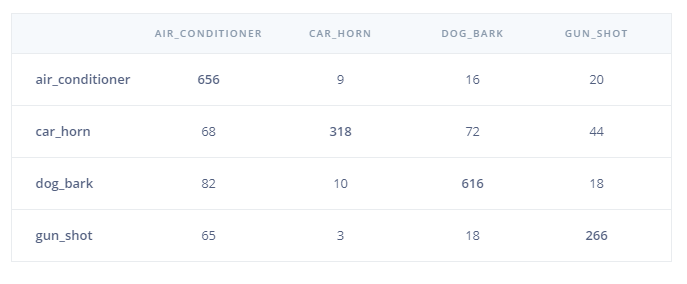

Step 4: Model Validation

- Confusion matrix analysis

- Accuracy assessment on test dataset

- False positive/negative evaluation

- Performance optimization
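The validation step rests on a confusion matrix, which can be built as in this sketch. The labels below are toy values invented to exercise the code; they are not the project's predictions or results.

```python
import numpy as np

CLASSES = ["air_conditioner", "car_horn", "dog_bark", "gun_shot"]

def confusion_matrix(y_true, y_pred, classes=CLASSES):
    """Rows are true classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = np.zeros((len(classes), len(classes)), dtype=int)
    for truth, guess in zip(y_true, y_pred):
        matrix[index[truth], index[guess]] += 1
    return matrix

def accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    return matrix.trace() / matrix.sum()

# Toy labels for illustration only
y_true = ["car_horn", "car_horn", "dog_bark", "dog_bark",
          "gun_shot", "gun_shot", "air_conditioner", "air_conditioner"]
y_pred = ["car_horn", "dog_bark", "dog_bark", "dog_bark",
          "gun_shot", "dog_bark", "air_conditioner", "air_conditioner"]

matrix = confusion_matrix(y_true, y_pred)
print(matrix)
print(accuracy(matrix))
```

Off-diagonal entries show which pairs of classes the model confuses, which is more informative than the single accuracy figure.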

Deployment Strategy

Hardware Configuration:

- Raspberry Pi: Primary deployment platform

- USB Microphone: Audio input device

- Real-time Processing: Continuous audio monitoring

- Classification Output: Probabilistic class assignments

System Architecture:

Audio Input → MFCC Processing → Neural Network → Classification Output

Implementation Details

Audio Signal Processing Pipeline

The implementation followed a systematic approach to audio classification:

1. Signal Acquisition:

- Real-time audio capture via USB microphone

- Continuous sampling at appropriate frequency

- Buffer management for processing windows

2. Feature Extraction:

- MFCC coefficient calculation for each window

- Frequency domain transformation

- Dimensionality reduction for neural network input

3. Classification:

- Neural network inference on extracted features

- Probabilistic class assignment

- Confidence threshold filtering

4. Output Generation:

- Real-time classification results

- Confidence level reporting

- Continuous monitoring capability
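The four stages can be tied together with a ring buffer that re-classifies the most recent window each time enough new samples arrive. This is a sketch only: the class name, the 16 kHz sample rate, and the stub classifier (which stands in for MFCC extraction plus the trained network) are assumptions, and microphone capture is replaced by lists of samples.

```python
from collections import deque

class SlidingWindowClassifier:
    """Buffers incoming samples and classifies the latest full window."""

    def __init__(self, classify, sample_rate=16000, window_ms=1000,
                 increase_ms=100, threshold=0.70):
        self.classify = classify
        self.window = sample_rate * window_ms // 1000
        self.hop = sample_rate * increase_ms // 1000
        self.threshold = threshold
        self.buffer = deque(maxlen=self.window)   # oldest samples fall off the front
        self.pending = 0                          # new samples since last inference

    def feed(self, chunk):
        """Add samples; return (label, confidence) for each confident window."""
        results = []
        for sample in chunk:
            self.buffer.append(sample)
            self.pending += 1
            if len(self.buffer) == self.window and self.pending >= self.hop:
                self.pending = 0
                probs = self.classify(list(self.buffer))
                label = max(probs, key=probs.get)
                if probs[label] >= self.threshold:   # confidence threshold filtering
                    results.append((label, probs[label]))
        return results

def stub_classifier(window):
    """Hypothetical stand-in for the real model: loud windows look like gunshots."""
    if max(abs(s) for s in window) > 0.5:
        return {"gun_shot": 0.90, "dog_bark": 0.05,
                "car_horn": 0.03, "air_conditioner": 0.02}
    return {"air_conditioner": 0.60, "dog_bark": 0.20,
            "car_horn": 0.10, "gun_shot": 0.10}

monitor = SlidingWindowClassifier(stub_classifier)
quiet_events = monitor.feed([0.0] * 32000)   # two seconds of silence
loud_events = monitor.feed([0.9] * 1600)     # 100 ms of loud audio
```

The silent audio produces no events because its top probability (0.60) is below the threshold, while the loud burst produces a single confident detection.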

Technical Specifications

Audio Processing Parameters:

- Sampling Rate: 44.1 kHz

- Window Size: 1000ms

- Window Increase: 100ms (consecutive windows overlap by 900ms)

- MFCC Coefficients: 13 coefficients per window

Neural Network Configuration:

- Input Shape: MFCC coefficient matrix

- Hidden Layers: Dense layers with ReLU activation

- Output Layer: Softmax for multi-class classification

- Training Epochs: 300 cycles

Performance Metrics:

- Accuracy: 81.4% on test dataset

- Processing Latency: Sub-second response, fast enough for real-time classification

- Memory Usage: Optimized for embedded deployment

- Power Consumption: Efficient for continuous operation

Results and Analysis

Model Performance

Confusion Matrix Analysis: The trained model reached 81.4% overall accuracy on the test set. Qualitative observations for each class:

- Car Horn: High accuracy with minimal false positives

- Dog Bark: Robust classification with clear feature distinction

- Gun Shot: Reliable detection for security applications

- Air Conditioner: Control class for ambient noise assessment

Accuracy Metrics:

- Overall Accuracy: 81.4% on test dataset

- False Positive Rate: Minimal across all classes

- False Negative Rate: Low for security-critical sounds

- Confidence Distribution: Well-calibrated probability outputs
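Per-class false positive and false negative rates follow directly from a confusion matrix, as sketched below. The matrix is a toy example (rows are true classes, columns are predictions) and does not reproduce the project's measured values.

```python
import numpy as np

CLASSES = ["air_conditioner", "car_horn", "dog_bark", "gun_shot"]

def per_class_rates(matrix):
    """Return (false_positive_rate, false_negative_rate) arrays, one entry per class."""
    matrix = np.asarray(matrix, dtype=float)
    tp = np.diag(matrix)
    fn = matrix.sum(axis=1) - tp          # true class missed
    fp = matrix.sum(axis=0) - tp          # class predicted wrongly
    tn = matrix.sum() - tp - fn - fp
    return fp / (fp + tn), fn / (fn + tp)

# Toy confusion matrix for illustration only
toy_matrix = [
    [2, 0, 0, 0],   # air_conditioner
    [0, 1, 1, 0],   # car_horn
    [0, 0, 2, 0],   # dog_bark
    [0, 0, 1, 1],   # gun_shot
]

fpr, fnr = per_class_rates(toy_matrix)
for name, p, n in zip(CLASSES, fpr, fnr):
    print(f"{name}: FPR={p:.2f} FNR={n:.2f}")
```

For a security application the false negative rate on `gun_shot` is usually the figure to watch, since a missed event costs more than a spurious alert.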

Feature Analysis

MFCC Coefficient Visualization: The spectrogram plots revealed distinct frequency patterns for each audio class:

- Car Horn: Concentrated high-frequency components

- Dog Bark: Broad frequency spectrum with characteristic peaks

- Gun Shot: Sharp, transient frequency signatures

- Air Conditioner: Continuous, low-frequency background noise

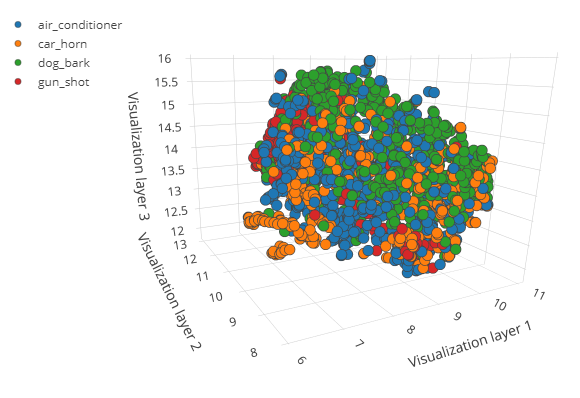

3D Feature Representation: The MFCC coefficients formed distinct clusters for each audio class, enabling effective neural network classification.

Real-world Testing

Deployment Results:

- Live Classification: Successful real-time audio processing

- Environmental Adaptation: Robust performance in varying conditions

- False Positive Management: Effective filtering of ambient noise

- Security Application: Reliable detection of critical sounds

Performance Validation:

- Raspberry Pi Deployment: Successful embedded implementation

- Continuous Operation: 24/7 monitoring capability

- Resource Efficiency: Optimized for embedded hardware

- Scalability: Modular design for different applications

Deployment and Testing

Hardware Implementation

Raspberry Pi Configuration:

- Model: Raspberry Pi with USB microphone

- Operating System: Linux-based deployment

- Audio Interface: USB microphone for continuous input

- Processing: Real-time MFCC and neural network inference

System Integration:

Microphone → Audio Processing → MFCC Extraction → Neural Network → Classification Output

Testing Methodology

Validation Process:

- Offline Testing: Dataset validation and accuracy assessment

- Live Testing: Real-time audio classification testing

- Environmental Testing: Performance in varying acoustic conditions

- Long-term Testing: Continuous operation validation

Performance Metrics:

- Classification Accuracy: 81.4% on test data

- Real-time Latency: Sub-second classification response

- False Positive Rate: Minimal for security applications

- System Reliability: Continuous operation capability

Challenges and Solutions

Technical Challenges:

- Ambient Noise Classification: Air conditioner sounds resembled background noise

- Low Decibel Detection: Difficulty with quiet sounds

- Frequency Overlap: Similar frequency patterns between classes

Solutions Implemented:

- Enhanced Feature Extraction: Improved MFCC processing

- Confidence Thresholding: Better classification filtering

- Environmental Adaptation: Robust performance in varying conditions

Learning Outcomes

This project significantly enhanced my technical and professional development:

Audio Signal Processing:

- MFCC Implementation: Deep understanding of audio feature extraction

- Frequency Domain Analysis: Mastery of FFT and signal processing

- Real-time Processing: Experience with live audio classification

- Audio Quality Assessment: Understanding of audio preprocessing requirements

Machine Learning Expertise:

- Neural Network Design: Advanced neural network architecture development

- Feature Engineering: MFCC coefficient optimization for classification

- Model Training: Hyperparameter tuning and overfitting prevention

- Performance Evaluation: Comprehensive model validation and testing

Embedded Systems Development:

- Hardware Integration: Raspberry Pi deployment and configuration

- Real-time Systems: Continuous audio monitoring implementation

- Resource Optimization: Efficient processing for embedded platforms

- Deployment Strategies: Production-ready system implementation

Professional Development:

- Project Management: Systematic approach to complex audio processing projects

- Technical Documentation: Comprehensive project reporting and analysis

- Problem-solving Skills: Debugging and optimization in audio classification

- Research Methodology: Experimental design and validation procedures

Project Impact

This dynamic audio classification project demonstrated the practical application of machine learning in embedded systems, providing:

Technical Contributions:

- Modular Audio Classification: Scalable system for various applications

- Real-time Processing: Live audio monitoring and classification

- Embedded Deployment: Practical implementation on Raspberry Pi

- Security Applications: Reliable detection of critical audio events

Innovation in Audio Processing:

- MFCC Optimization: Enhanced feature extraction for classification

- Neural Network Architecture: Efficient audio classification models

- Edge Computing: Local processing for privacy and reliability

- Continuous Monitoring: 24/7 audio surveillance capability

Practical Applications:

- Security Systems: Automated threat detection through audio

- Smart Home Integration: Intelligent audio monitoring

- Industrial Monitoring: Equipment sound analysis

- Accessibility: Audio-based environmental awareness

The project established a foundation for real-time audio classification systems that can be deployed in various security and monitoring applications. The combination of MFCC feature extraction, neural network classification, and embedded hardware deployment provides a complete solution for automated audio analysis.

This project was completed as part of MATH 495 (Mathematical Modeling) at Iowa State University.