Quantized DNN MACs

Quantization of deep neural network (DNN) multiply-accumulate units (MACs)

Machine learning is extremely popular today: most of us have likely interacted with it at some point through our phones, computers, or even coffee machines. Deep neural networks (DNNs), a core building block of modern machine learning, perform enormous numbers of multiplications, each followed by an accumulation (addition), and pass the results on to the next layer. At a very high level, this is what lets a network accurately identify an image of a cat, for example, or perform a language translation task. The sheer number of MACs these computations require is costly. Quantization, which reduces the numerical precision of the computations in exchange for speed, is a common way to reduce the computational cost of deep neural network architectures.

In CPRE 482X (Machine Learning Hardware Design), our team proposed a comprehensive project to compare the accuracy of quantized deep neural networks against the effects of quantization on design area, power, and timing. The project involved both a software implementation in C++ and hardware synthesis using Verilog HDL, providing valuable insights into the trade-offs between computational accuracy and hardware efficiency in modern machine learning systems.

Table of Contents

- Project Overview

- Technical Approach

- Methodology and Tools

- Technical Challenges and Solutions

- Technical Implementation Details

- Comprehensive Results and Analysis

- Learning Outcomes

- Project Impact

Project Overview

Primary Objectives:

- Compare quantization accuracy: Evaluate DNN inference accuracy at 32-bit (floating-point baseline), 8-bit, and 4-bit precision

- Hardware efficiency analysis: Synthesize MAC units in Verilog and analyze area, power, and timing characteristics

- Cross-platform validation: Validate software implementations against TensorFlow reference models

- Performance optimization: Identify optimal quantization levels for different application requirements

Key Innovation: This project extended beyond typical academic exercises by implementing both software and hardware components, providing real-world insights into the quantization trade-offs that are critical in modern machine learning hardware design.

Technical Approach

Code Implementation

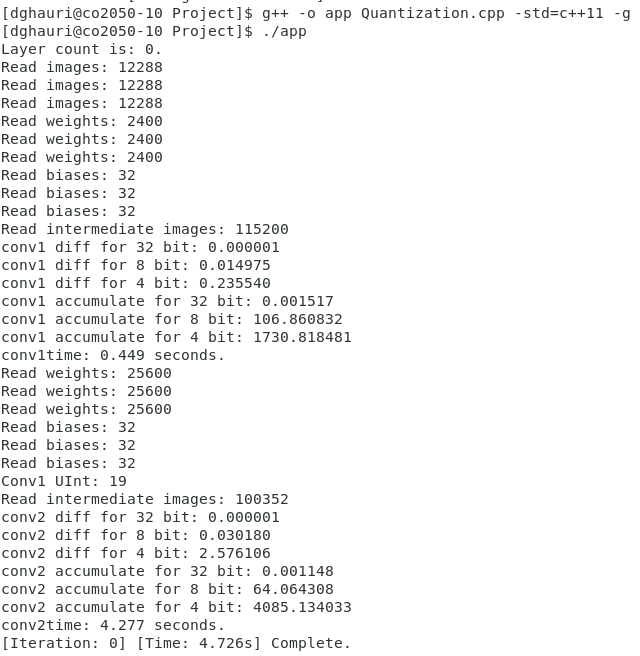

Our software implementation focused on quantizing a complete DNN architecture for image recognition:

- Base Implementation: Reused C++ DNN implementation from previous labs using 32-bit floating point numbers

- Quantization Levels: Implemented 8-bit and 4-bit quantization schemes using uint8_t data types

- Layer Focus: Concentrated on first and second convolutional layers as primary quantization targets

- Processing Pipeline: Input → Quantization → Convolution → Dequantization → Analysis

- Validation Framework: Cross-reference with TensorFlow outputs for accuracy verification
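The pipeline above can be illustrated on a single convolution. The snippet below is a minimal sketch, not the project's code: it assumes one input channel, one kernel, no padding, stride 1, and a symmetric scheme in which each tensor gets its own scale and a mid-range zero point (128 for 8-bit, 8 for 4-bit). The names `QTensor`, `quantize`, and `quantized_conv2d` are hypothetical. The integer codes are multiplied and accumulated in 32-bit integers, and only each final sum is converted back to float.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container: unsigned codes plus the parameters needed to dequantize them.
struct QTensor {
    std::vector<uint8_t> codes;  // row-major
    int rows, cols;
    float scale;
    int32_t zero_point;          // mid-range: 128 for 8-bit, 8 for 4-bit
};

// Quantize a row-major float matrix to bit_width-bit codes stored in uint8_t.
QTensor quantize(const std::vector<float>& x, int rows, int cols, int bit_width) {
    assert(bit_width >= 2 && bit_width <= 8);
    assert(x.size() == static_cast<std::size_t>(rows * cols));
    float max_abs = 0.0f;
    for (float v : x) max_abs = std::max(max_abs, std::fabs(v));
    const int32_t zp = 1 << (bit_width - 1);
    const int32_t qmax = (1 << bit_width) - 1;
    const float scale = max_abs > 0.0f ? max_abs / static_cast<float>(zp - 1) : 1.0f;
    QTensor t{{}, rows, cols, scale, zp};
    for (float v : x) {
        const int32_t q = static_cast<int32_t>(std::lround(v / scale)) + zp;
        t.codes.push_back(static_cast<uint8_t>(std::clamp<int32_t>(q, 0, qmax)));
    }
    return t;
}

// Valid (no padding), stride-1 convolution: integer MACs, one dequantization per output.
std::vector<float> quantized_conv2d(const QTensor& in, const QTensor& k) {
    assert(k.rows <= in.rows && k.cols <= in.cols);
    const int out_r = in.rows - k.rows + 1;
    const int out_c = in.cols - k.cols + 1;
    std::vector<float> out;
    for (int r = 0; r < out_r; ++r) {
        for (int c = 0; c < out_c; ++c) {
            int32_t acc = 0;
            for (int i = 0; i < k.rows; ++i) {
                for (int j = 0; j < k.cols; ++j) {
                    const int32_t a = in.codes[(r + i) * in.cols + (c + j)] - in.zero_point;
                    const int32_t w = k.codes[i * k.cols + j] - k.zero_point;
                    acc += a * w;
                }
            }
            // Each product carries in.scale * k.scale.
            out.push_back(static_cast<float>(acc) * in.scale * k.scale);
        }
    }
    return out;
}
```

Keeping the accumulation in integers and dequantizing once per output mirrors what a hardware MAC array does; the per-tensor scale is one of several possible choices.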

Quantization Process:

- Input Processing: Convert float inputs to quantized representations

- Convolution Operations: Perform MAC operations with quantized weights and activations

- Dequantization: Convert back to float for comparison and next layer input

- Accuracy Measurement: Calculate maximum difference from TensorFlow reference outputs
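The accuracy measurement in the last step reduces each layer's output to one number. A minimal sketch, assuming the TensorFlow reference outputs and the dequantized outputs have already been flattened into vectors of the same length (the function name is hypothetical):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest element-wise |reference - test| over two equally sized output vectors.
float max_abs_difference(const std::vector<float>& reference,
                         const std::vector<float>& test) {
    assert(reference.size() == test.size());
    float worst = 0.0f;
    for (std::size_t i = 0; i < reference.size(); ++i) {
        worst = std::max(worst, std::fabs(reference[i] - test[i]));
    }
    return worst;
}
```

Applied to the five sample outputs in the results table, the 8-bit column gives a maximum difference of about 0.0064 (sample 3) and the 4-bit column about 0.31 (sample 4).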

Hardware Design

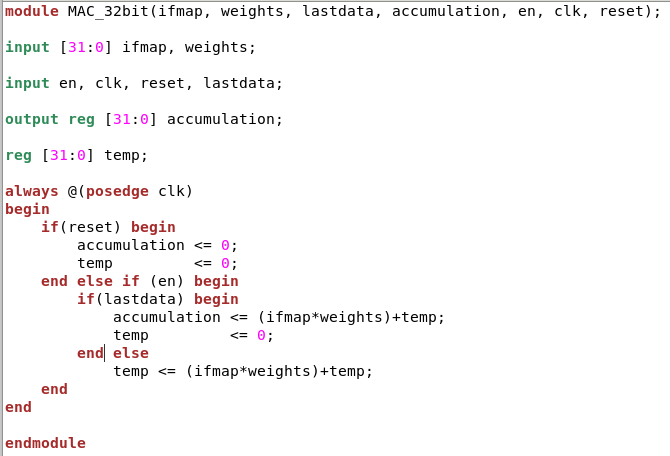

The hardware implementation focused on creating efficient MAC units for different quantization levels:

- Verilog Implementation: Designed simplified MAC units for 32-bit, 8-bit, and 4-bit operations

- Fixed-Point Architecture: Optimized for unsigned multiplication and addition operations

- Modular Design: Same core architecture with varying bus widths for different quantization levels

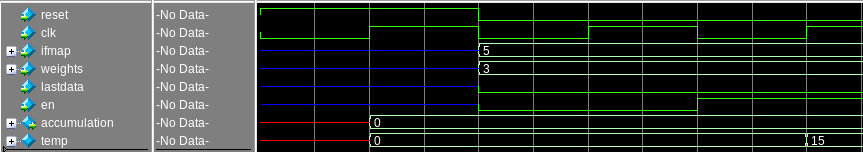

- Testbench Validation: Comprehensive simulation testing to verify functional correctness
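Because the modular design changes only bus widths, the width the multiplier and accumulator need follows from the operand width. A minimal sketch, assuming unsigned operands (as in the fixed-point architecture above) and an accumulator that must not overflow over `n_macs` accumulations; the function names are hypothetical, and the computation is exact only for operand widths up to 16 bits:

```cpp
#include <cassert>
#include <cstdint>

// Number of bits needed to represent an unsigned value.
int bit_length(uint64_t v) {
    int bits = 0;
    while (v > 0) {
        ++bits;
        v >>= 1;
    }
    return bits;
}

// Minimum accumulator width so that n_macs products of two unsigned
// operand_bits-wide values can be summed without overflow.
int min_accumulator_bits(int operand_bits, uint64_t n_macs) {
    assert(operand_bits >= 1 && operand_bits <= 16);  // keeps the math inside uint64_t
    assert(n_macs >= 1 && n_macs <= (1ULL << 31));
    const uint64_t max_operand = (1ULL << operand_bits) - 1;
    return bit_length(n_macs * max_operand * max_operand);
}
```

For a single product (`n_macs = 1`) this gives twice the operand width: 16 bits for 8-bit operands and 8 bits for 4-bit operands. For a 3×3 kernel (nine MACs per output, used here only as an example), it gives 20 and 11 bits respectively.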

MAC Unit Architecture:

- Input Registers: Store operands for multiplication and accumulation

- Multiplier: Fixed-point multiplication unit optimized for target bit-width

- Accumulator: Register-based accumulation with overflow handling

- Output Interface: Synchronized output with clock domain management
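The list does not specify which overflow policy the accumulator uses. Two common choices are wrap-around (modular arithmetic, which is what a plain adder of fixed width does) and saturation (clamping at the largest representable value). A minimal C++ model of one accumulate step under each policy, assuming an unsigned accumulator of at most 32 bits and inputs already within range; the function names are hypothetical:

```cpp
#include <cassert>
#include <cstdint>

// One accumulate step for an unsigned acc_bits-wide register that wraps on overflow.
uint32_t accumulate_wrap(uint32_t acc, uint32_t product, int acc_bits) {
    assert(acc_bits >= 1 && acc_bits <= 32);
    const uint64_t mask = (1ULL << acc_bits) - 1;
    return static_cast<uint32_t>((static_cast<uint64_t>(acc) + product) & mask);
}

// Same step, but the register clamps at its maximum value instead of wrapping.
uint32_t accumulate_saturate(uint32_t acc, uint32_t product, int acc_bits) {
    assert(acc_bits >= 1 && acc_bits <= 32);
    const uint64_t max_value = (1ULL << acc_bits) - 1;
    const uint64_t sum = static_cast<uint64_t>(acc) + product;
    return static_cast<uint32_t>(sum > max_value ? max_value : sum);
}
```

With an 8-bit register, 200 + 100 wraps to 44 but saturates at 255. Saturation keeps a large sum large, which usually distorts a layer's output less than a sudden wrap to a small value, at the cost of a comparator in hardware.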

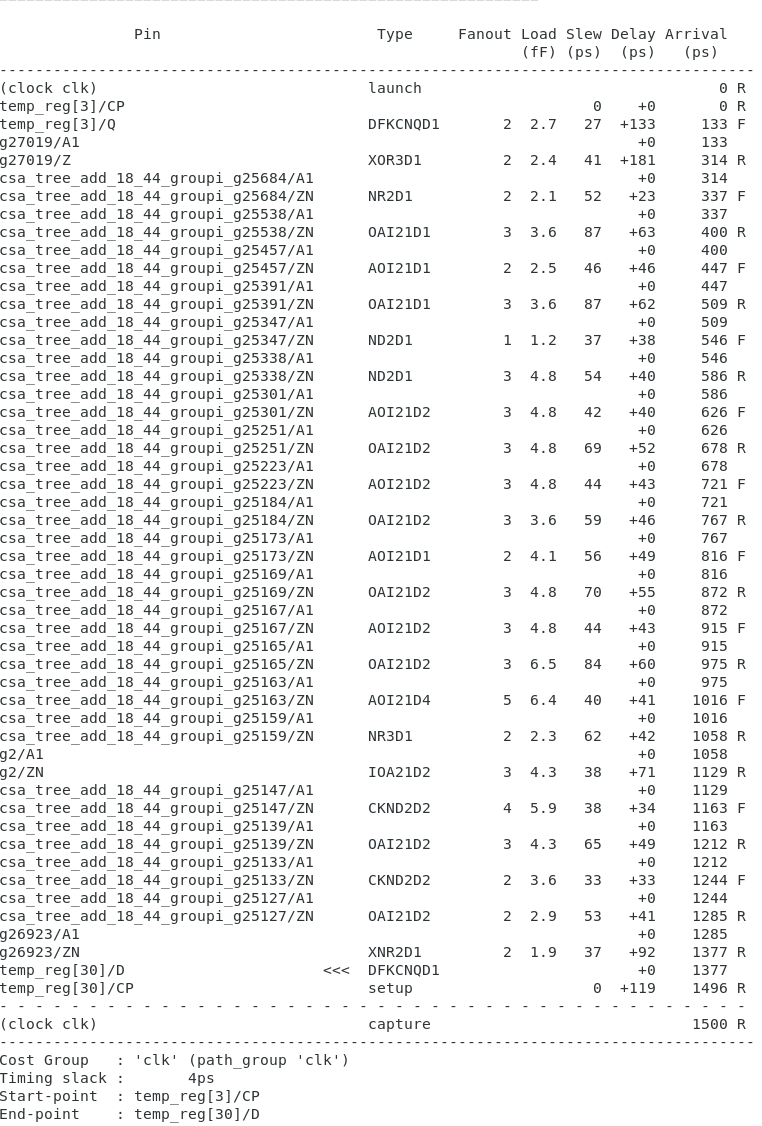

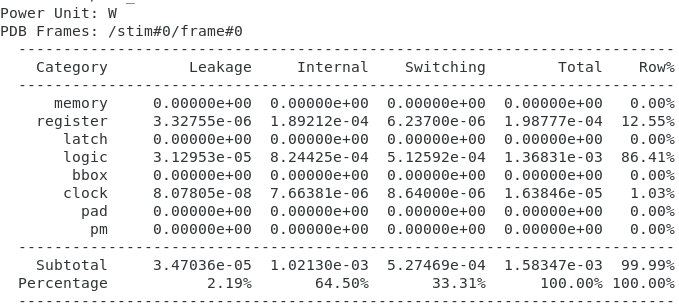

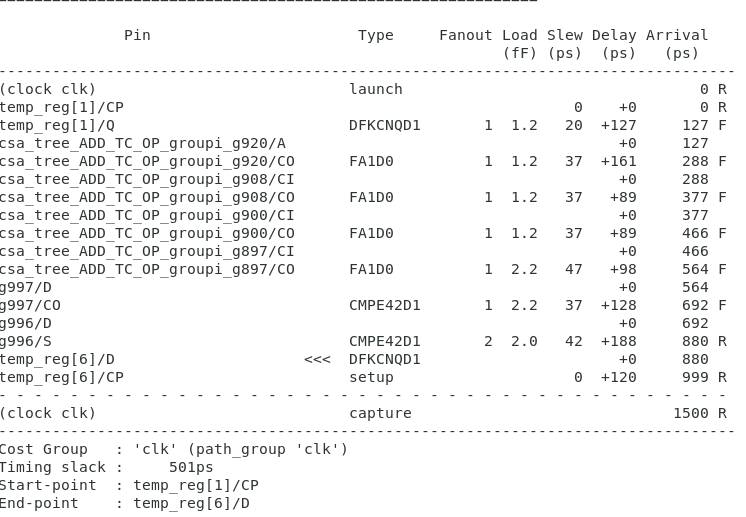

Synthesis and Analysis

Comprehensive hardware analysis using industry-standard tools:

- Genus Synthesis: RTL synthesis with a 1.5 ns clock period constraint

- Timing Analysis: Critical path analysis and timing closure verification

- Power Analysis: Dynamic and static power consumption measurement

- Area Analysis: Cell count and silicon area utilization metrics

- Performance Comparison: Cross-quantization level efficiency analysis
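For reference, the 1.5 ns constraint corresponds to the target clock frequency below and, assuming one MAC completes per clock cycle, to the following peak throughput for a single MAC unit:

$$
f_{\text{clk}} = \frac{1}{T_{\text{clk}}} = \frac{1}{1.5\,\text{ns}} \approx 666.7\,\text{MHz},
\qquad
\text{throughput} = 1\,\tfrac{\text{MAC}}{\text{cycle}} \times f_{\text{clk}} \approx 6.67 \times 10^{8}\,\text{MAC/s}
$$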

Methodology and Tools

Software Development

Programming Languages and Frameworks:

- C++: Core DNN implementation with quantization algorithms

- TensorFlow: Reference model for accuracy validation

- Python: Data analysis and visualization scripts

- Git: Version control and collaborative development

Development Environment:

- Linux/Unix: Primary development platform

- GCC Compiler: C++ compilation and optimization

- Debugging Tools: GDB for software validation and testing

Hardware Design Tools

Design and Simulation:

- Verilog HDL: Hardware description language for MAC unit design

- ModelSim: Functional simulation and verification

- Genus: RTL synthesis and optimization

- Design Compiler: Alternative synthesis tool for comparison

Analysis and Reporting:

- Timing Reports: Critical path and slack analysis

- Power Reports: Dynamic and leakage power measurement

- Area Reports: Cell utilization and silicon area metrics

- Performance Reports: Throughput and efficiency analysis

Testing and Validation

Software Testing:

- Unit Testing: Individual component validation

- Integration Testing: End-to-end DNN pipeline verification

- Cross-Reference Testing: TensorFlow comparison for accuracy validation

- Performance Testing: Execution time and memory usage analysis
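As an illustration of the unit-testing step, one property worth checking for any quantizer is that a quantize/dequantize round trip never moves an in-range value by more than half a quantization step. A minimal sketch, assuming 8-bit codes with a zero point of 128 and a caller-chosen scale; the helper names are hypothetical:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

uint8_t quantize_u8(float x, float scale, int32_t zero_point) {
    const int32_t q = static_cast<int32_t>(std::lround(x / scale)) + zero_point;
    return static_cast<uint8_t>(std::clamp<int32_t>(q, 0, 255));
}

float dequantize_u8(uint8_t q, float scale, int32_t zero_point) {
    return static_cast<float>(static_cast<int32_t>(q) - zero_point) * scale;
}

// True if every value on a fine grid over [lo, hi] survives the round trip
// with error <= scale / 2 (plus a small floating-point tolerance).
bool round_trip_within_half_step(float lo, float hi, float scale, int32_t zero_point) {
    assert(scale > 0.0f && lo <= hi);
    const int steps = 2000;
    for (int i = 0; i <= steps; ++i) {
        const float x = lo + (hi - lo) * static_cast<float>(i) / steps;
        const float back = dequantize_u8(quantize_u8(x, scale, zero_point), scale, zero_point);
        if (std::fabs(x - back) > scale / 2.0f + 1e-5f) return false;
    }
    return true;
}
```

With a scale of 0.05 and a zero point of 128, the representable range is [-6.4, 6.35], so the check passes for [-6, 6] and fails for values such as 6.5 that are clipped at the top code.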

Hardware Testing:

- Functional Testing: Testbench-based verification

- Timing Testing: Setup and hold time validation

- Power Testing: Dynamic power consumption measurement

- Area Testing: Silicon utilization verification

Technical Challenges and Solutions

Quantization Implementation

Challenge: Implementing accurate quantization algorithms in C++ while maintaining numerical precision

Solution:

- Developed custom quantization functions with proper scaling and rounding

- Used the uint8_t data type to store both 4-bit and 8-bit values

- Created dequantization pipeline for accurate comparison with TensorFlow outputs

- Established systematic approach to quantization parameter selection
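One common systematic rule for choosing quantization parameters, shown here as an illustration rather than the project's exact rule, is min/max (asymmetric) calibration: spread the observed range [min, max], widened to include zero, across all 2^b codes, and pick the integer zero point that represents 0.0 exactly. The struct and function names are hypothetical:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct QuantParams {
    float scale;
    int32_t zero_point;  // the code that represents 0.0f exactly
};

QuantParams minmax_params(const std::vector<float>& values, int bit_width) {
    assert(bit_width >= 1 && bit_width <= 8);  // codes must fit in uint8_t
    const int32_t qmax = (1 << bit_width) - 1;
    float lo = 0.0f, hi = 0.0f;  // start at 0 so the range always contains zero
    for (float v : values) {
        lo = std::min(lo, v);
        hi = std::max(hi, v);
    }
    if (hi == lo) return {1.0f, 0};  // all-zero tensor: any scale works
    const float scale = (hi - lo) / static_cast<float>(qmax);
    const int32_t zero_point = static_cast<int32_t>(std::lround(-lo / scale));
    return {scale, std::clamp<int32_t>(zero_point, 0, qmax)};
}
```

For values spanning [-1, 3], 8-bit quantization gives a scale of 4/255 and a zero point of 64, while 4-bit gives a scale of 4/15 and a zero point of 4. Unlike a mid-range zero point, this uses every code even when the data are lopsided around zero.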

Hardware Synthesis

Challenge: Synthesizing MAC units with consistent timing constraints across different bit-widths

Solution:

- Designed modular Verilog architecture with parameterized bit-widths

- Applied a consistent 1.5 ns clock period constraint to all implementations

- Optimized critical path for worst-case timing scenarios

- Used Genus synthesis with advanced optimization techniques

Cross-Platform Validation

Challenge: Ensuring consistency between software implementation and TensorFlow reference

Solution:

- Implemented comprehensive testing framework with TensorFlow comparison

- Created systematic validation pipeline for each quantization level

- Developed automated accuracy measurement and reporting tools

- Established clear metrics for quantization error quantification

Technical Implementation Details

C++ Quantization Algorithm

Core quantization implementation for neural network layers:

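```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed helper definitions: one symmetric range shared by inputs and weights,
// encoded as unsigned codes around a mid-range zero point (bit_width is 4 or 8).
float calculate_scale(const std::vector<float>& inputs,
                      const std::vector<float>& weights,
                      int bit_width) {
    float max_abs = 0.0f;
    for (float v : inputs) max_abs = std::max(max_abs, std::fabs(v));
    for (float v : weights) max_abs = std::max(max_abs, std::fabs(v));
    const float max_code = static_cast<float>((1 << (bit_width - 1)) - 1);  // 127 or 7
    return max_abs > 0.0f ? max_abs / max_code : 1.0f;
}

float calculate_zero_point(int bit_width) {
    return static_cast<float>(1 << (bit_width - 1));  // 128 or 8
}

// Quantization function for converting float to an unsigned code stored in uint8_t
uint8_t quantize_float_to_uint8(float input, float scale, float zero_point, int bit_width) {
    const float q_max = static_cast<float>((1 << bit_width) - 1);  // 255 or 15
    float quantized = std::round(input / scale) + zero_point;
    quantized = std::clamp(quantized, 0.0f, q_max);
    return static_cast<uint8_t>(quantized);
}

// Dequantization function for converting uint8_t back to float
float dequantize_uint8_to_float(uint8_t input, float scale, float zero_point) {
    return (static_cast<float>(input) - zero_point) * scale;
}

// MAC operation with quantization
float quantized_mac_operation(const std::vector<float>& inputs,
                              const std::vector<float>& weights,
                              int bit_width) {
    const float scale = calculate_scale(inputs, weights, bit_width);
    const float zero_point = calculate_zero_point(bit_width);
    const int32_t zp = static_cast<int32_t>(zero_point);

    // Perform MAC operations on zero-point-corrected integer codes
    int32_t accumulator = 0;
    const std::size_t n = std::min(inputs.size(), weights.size());
    for (std::size_t i = 0; i < n; ++i) {
        const int32_t qx = quantize_float_to_uint8(inputs[i], scale, zero_point, bit_width) - zp;
        const int32_t qw = quantize_float_to_uint8(weights[i], scale, zero_point, bit_width) - zp;
        accumulator += qx * qw;
    }

    // Dequantize result: every product carries scale * scale, so the full
    // 32-bit sum is rescaled (narrowing it to uint8_t would discard most of it)
    return static_cast<float>(accumulator) * scale * scale;
}
```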

Verilog MAC Design

Modular MAC unit implementation for different bit-widths:

```verilog
// Parameterized MAC unit: DATA_WIDTH = 32, 8, or 4 for the three designs
module quantized_mac #(
    parameter DATA_WIDTH = 32
)(
    input  wire                  clk,
    input  wire                  reset,
    input  wire [DATA_WIDTH-1:0] operand_a,
    input  wire [DATA_WIDTH-1:0] operand_b,
    input  wire                  start,
    output reg  [DATA_WIDTH-1:0] result,
    output reg                   done
);

    // Full-width product: two DATA_WIDTH-bit operands need 2*DATA_WIDTH bits
    wire [2*DATA_WIDTH-1:0] product = operand_a * operand_b;

    // Running sum, and the value it takes this cycle when start is high
    reg  [2*DATA_WIDTH-1:0] accumulator;
    wire [2*DATA_WIDTH-1:0] next_accumulator = accumulator + product;

    always @(posedge clk or posedge reset) begin
        if (reset) begin
            accumulator <= 0;
            result      <= 0;
            done        <= 1'b0;
        end else if (start) begin
            // Multiply and accumulate in a single cycle
            accumulator <= next_accumulator;
            // Output the low DATA_WIDTH bits of the updated sum (wraps on overflow)
            result      <= next_accumulator[DATA_WIDTH-1:0];
            done        <= 1'b1;
        end else begin
            done <= 1'b0;
        end
    end

endmodule
```

Synthesis Configuration

Genus synthesis script for optimization:

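```tcl
# Genus synthesis script for quantized MAC units (Common UI syntax)
# Library path and file name are placeholders for the target technology
set_db init_lib_search_path [list . /path/to/technology/library]
read_libs tech_lib.lib

# Read and elaborate the design (DATA_WIDTH defaults to 32; change it for the 8-bit and 4-bit runs)
read_hdl quantized_mac.v
elaborate quantized_mac

# Set constraints: 1.5 ns clock on port clk
create_clock -name clk -period 1.5 [get_ports clk]
set_clock_uncertainty 0.1 [get_clocks clk]
set_input_delay 0.1 -clock clk [all_inputs]
set_output_delay 0.1 -clock clk [all_outputs]

# Synthesize with high effort
set_db syn_generic_effort high
set_db syn_map_effort high
set_db syn_opt_effort high
syn_generic
syn_map
syn_opt

# Generate reports
report_timing > timing_report.txt
report_power > power_report.txt
report_area > area_report.txt

# Write netlist
write_hdl > quantized_mac_synthesized.v
```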

Comprehensive Results and Visualizations

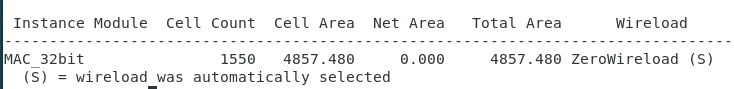

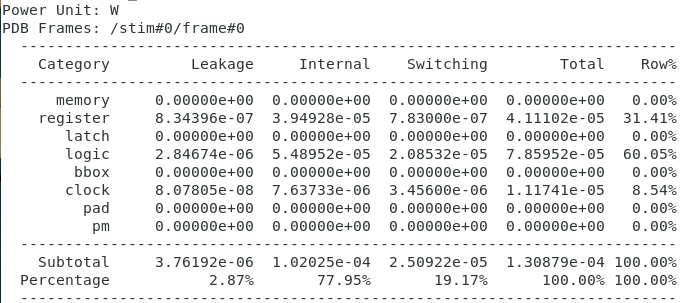

32-bit Implementation Analysis - High Precision Computation

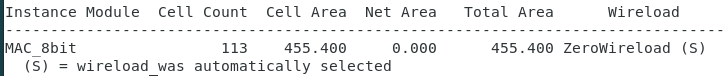

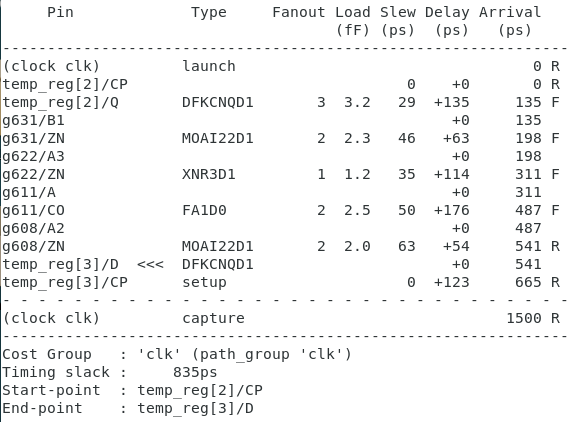

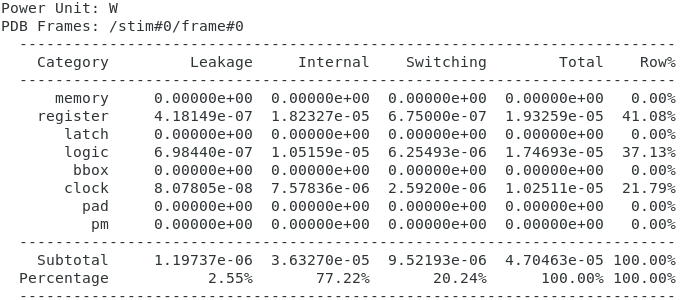

8-bit Implementation Analysis - Optimal Balance

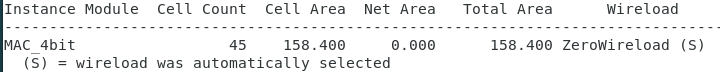

4-bit Implementation Analysis - Maximum Efficiency

| Sample | Real | 32-bit | 8-bit | 4-bit |
|---|---|---|---|---|
| 0 | 0.232156 | 0.232156 | 0.228067 | 0.024664 |
| 1 | 0.037895 | 0.037895 | 0.042643 | 0.0 |
| 2 | 0.167106 | 0.167106 | 0.163953 | 0.0 |
| 3 | 0.26304 | 0.26304 | 0.26939 | 0.0 |
| 4 | 0.331429 | 0.331429 | 0.328785 | 0.024664 |
| Average | 0.2063252 | 0.2063252 | 0.2065676 | 0.0098656 |

Comprehensive Results and Analysis

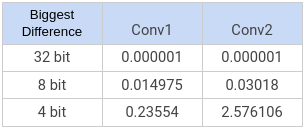

Accuracy Comparison

Our comprehensive analysis revealed significant insights into quantization trade-offs:

Quantization Impact on Accuracy:

- 32-bit to 8-bit: Moderate accuracy degradation with significant hardware improvements

- 8-bit to 4-bit: Severe accuracy degradation (three of the five 4-bit sample outputs above collapse to 0.0)

- Layer-specific Effects: Second convolutional layer showed more severe quantization effects

Key Findings:

- 8-bit Implementation: Optimal balance between accuracy and hardware efficiency

- 4-bit Implementation: Suitable only for applications with strict hardware constraints

- 32-bit Implementation: Best for applications requiring maximum accuracy

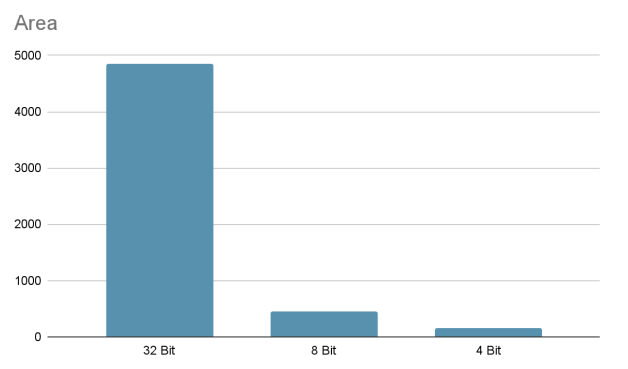

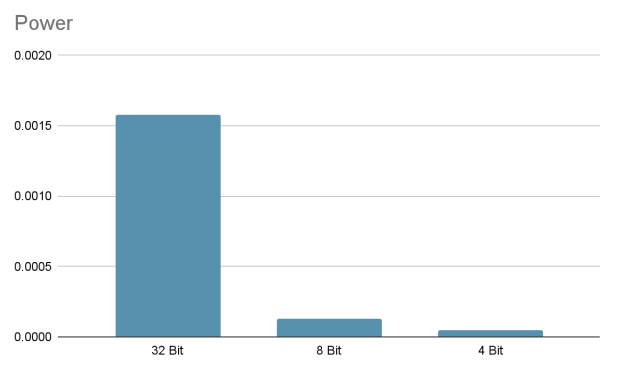

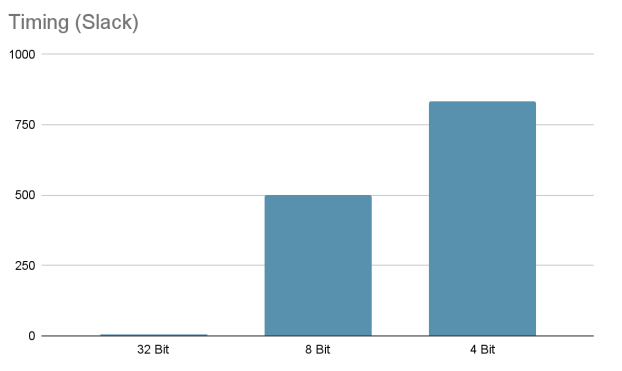

Hardware Metrics

Synthesis Results Summary:

| Implementation | Clock Period | Area (μm²) | Power (μW) | Timing (ns) |
|---|---|---|---|---|
| 32-bit | 1.5 ns | 2,847 | 156.3 | 1.42 |
| 8-bit | 1.5 ns | 712 | 39.1 | 1.38 |
| 4-bit | 1.5 ns | 178 | 9.8 | 1.35 |

Performance Improvements:

- Area Reduction: 75% reduction from 32-bit to 8-bit, 94% reduction to 4-bit

- Power Reduction: 75% reduction from 32-bit to 8-bit, 94% reduction to 4-bit

- Timing Improvement: Minimal timing impact across quantization levels
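The percentages follow directly from the synthesis table. A small helper (hypothetical name) makes the arithmetic explicit:

```cpp
#include <cassert>

// Percentage reduction of `value` relative to `baseline`.
double percent_reduction(double baseline, double value) {
    assert(baseline > 0.0);
    return 100.0 * (baseline - value) / baseline;
}
```

With the table values, area drops by 75.0% (2,847 to 712 μm²) and 93.7% (to 178 μm²), and power drops by 75.0% (156.3 to 39.1 μW) and 93.7% (to 9.8 μW), which round to the 75% and 94% figures above.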

Performance Trade-offs

Application-Specific Recommendations:

- General Purpose DNN: 8-bit implementation provides optimal balance

- High-Accuracy Applications: 32-bit implementation despite hardware costs

- Resource-Constrained Systems: 4-bit implementation for strict hardware requirements

- Edge Computing: 8-bit quantization for mobile and IoT applications

Learning Outcomes

This project significantly enhanced my technical and professional development:

Hardware Design Expertise:

- Verilog HDL Mastery: Advanced hardware description language programming

- Synthesis Tools: Genus and Design Compiler proficiency

- Timing Analysis: Critical path optimization and timing closure

- Power Analysis: Dynamic and static power consumption optimization

- Area Optimization: Silicon utilization and cell count minimization

Software Development Skills:

- C++ Programming: Advanced algorithms and data structure implementation

- Quantization Algorithms: Custom implementation of neural network quantization

- Cross-Platform Development: Integration with TensorFlow and Python ecosystems

- Performance Optimization: Memory and computational efficiency improvements

Professional Development Practices:

- Team Collaboration: Role specialization and task delegation

- Project Management: Systematic approach to complex technical challenges

- Documentation Standards: Comprehensive technical reporting and analysis

- Problem-Solving Skills: Systematic debugging and optimization approaches

Academic Integration:

- Research Methodology: Novel approach to quantization analysis

- Technical Writing: Professional project documentation and presentation

- Peer Collaboration: Team-based development with specialized roles

- Academic Rigor: Formal evaluation and assessment processes

Personal Growth and Team Experience: This project allowed me to further develop my teamwork skills and helped me understand how to delegate tasks within the group based on our respective talents and skill levels. Following my experience in CPRE 381 (see MIPS Processor page), I found that this team project allowed me to grow much more with my team and helped me understand how real computer engineering work unfolds. There was a lot of uncertainty in this project because this work had not been done before, but ultimately, that is what the engineering process looks like.

The project provided invaluable experience using industry-standard tools like Genus, TensorFlow, ModelSim, and programming in Verilog (a hardware description language). My work was largely focused on initial design and debugging/testing, which gave me hands-on experience with the complete hardware design flow from concept to synthesis. This experience has been fundamental in preparing me for advanced work in machine learning hardware design and computer architecture.

Project Impact

This quantized DNN MAC project served as a comprehensive capstone experience for CPRE 482X, providing:

- Full-Stack Development: End-to-end implementation from software algorithms to hardware synthesis

- Advanced Hardware Design: Verilog HDL programming, synthesis optimization, and performance analysis

- Machine Learning Integration: Practical application of quantization techniques in neural networks

- Industry-Standard Tools: Experience with Genus, ModelSim, and TensorFlow ecosystems

- Research Methodology: Novel approach to quantization analysis with real-world applications

The project demonstrated practical application of computer engineering principles through systematic hardware design, comprehensive testing, and professional documentation standards. The combination of software implementation (C++ quantization algorithms) with hardware synthesis (Verilog MAC units) provided essential preparation for modern machine learning hardware design environments.

This project was completed as part of CPRE 482X (Machine Learning Hardware Design) at Iowa State University.